Recently, a Supermicro node server came into our hands, which we are planning to use for a hot-standby system. A vital requirement for replication and hot standby is a reliable and fast network connection between the nodes. The Supermicro nodes are equipped with a Mellanox Infiniband network adapter, which claims to provide transfer rates of up to 40 Gbit/s. This networking standard seems to have the following advantages:

- It’s reasonably priced: adapters, cables and switches are all available at affordable prices (switches even on eBay).

- It’s very fast: 40 Gbit/s sounds very promising, that is four times 10 Gbit Ethernet, but cheaper.
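The 40 Gbit/s figure is a signalling rate. A minimal sketch of the arithmetic, assuming a 4X QDR link (four lanes at 10 Gbit/s each, consistent with the `Rate: 40` that ibstat reports later on) and QDR's 8b/10b line encoding; the constant and function names are only for illustration:

```python
# Sketch: nominal vs. usable rate of a 4X QDR Infiniband link.
# Assumptions: 4 lanes, 10 Gbit/s signalling per lane, 8b/10b line encoding.

LANES = 4
LANE_SIGNAL_GBIT = 10.0
ENCODING_EFFICIENCY = 8 / 10  # 8 data bits are sent as 10 bits on the wire


def link_rates(lanes: int, lane_gbit: float, efficiency: float) -> tuple[float, float]:
    """Return (nominal signalling rate, usable data rate) in Gbit/s."""
    nominal = lanes * lane_gbit
    return nominal, nominal * efficiency


if __name__ == "__main__":
    nominal, usable = link_rates(LANES, LANE_SIGNAL_GBIT, ENCODING_EFFICIENCY)
    print(f"nominal {nominal:.0f} Gbit/s, usable {usable:.0f} Gbit/s")
    # 10 Gbit Ethernet carries 10 Gbit/s of data
    print(f"usable rate vs. 10 Gbit Ethernet: {usable / 10:.1f}x")
```

Under these assumptions about 32 Gbit/s remain for data, so measured against the 10 Gbit/s data rate of 10 Gbit Ethernet the advantage is closer to a factor of 3.2 than 4.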

As Infiniband is not that common, I decided to sum up my findings in this article to make the setup easier for others.

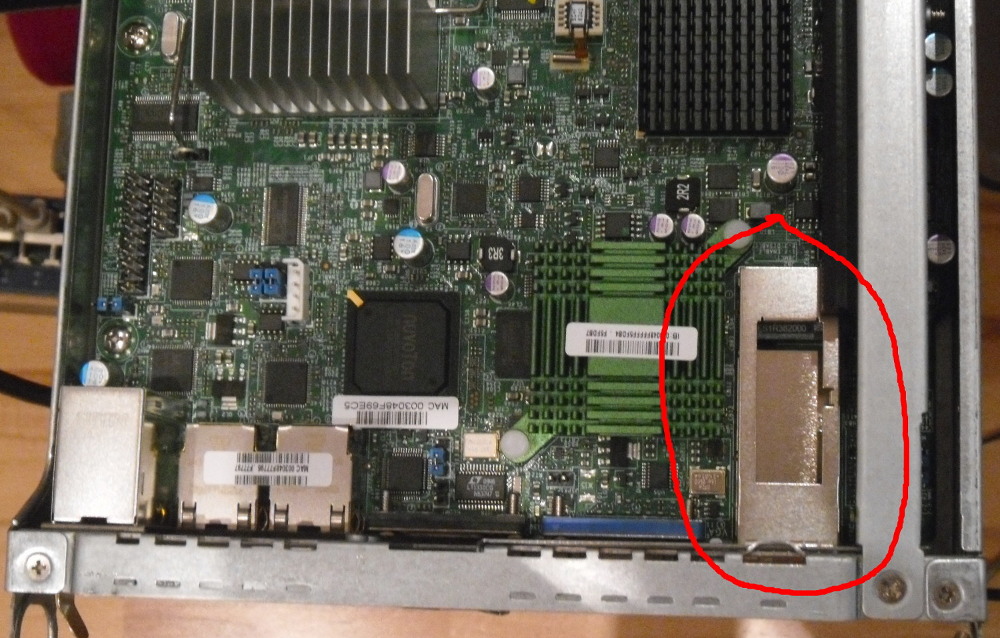

Below one can see the mainboard with the Mellanox interface.

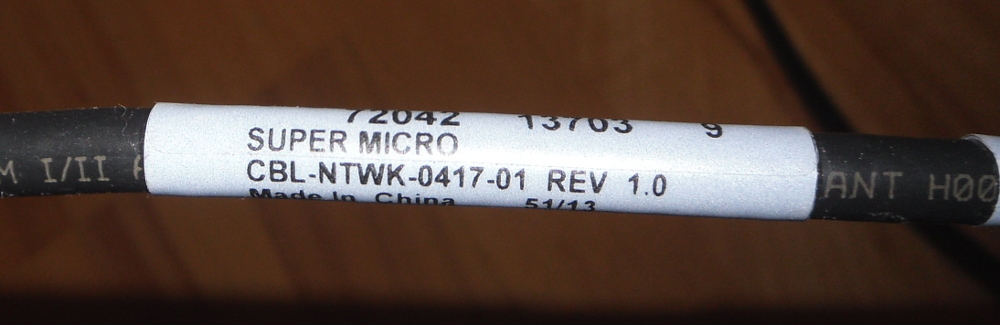

The first task was to find suitable cables for this interface. After some digging around, it became clear that this interface needs a transceiver mounted at the end of the cable, which converts the signal for the medium (either copper wire or optical fiber). The standard used here is called “QSFP”, and such cables are available directly from Supermicro at an acceptable price. The cable looks like the following:

Debian-Linux Configuration

Now that the hardware was sorted out, the next step was to set up the network interface on Debian Linux. Fortunately, there is a web page that describes all necessary steps: https://pkg-ofed.alioth.debian.org/howto/infiniband-howto.html. Following this guide, I installed the following packages from Debian wheezy instead of rebuilding them from source:

- infiniband-diags – InfiniBand diagnostic programs

- ibutils – InfiniBand network utilities

- mstflint – Mellanox firmware burning application

So a simple “apt-get install ibutils infiniband-diags mstflint” provides some interesting tools. To be able to use these tools, some kernel modules have to be loaded explicitly, which is done by adding the following to /etc/modules:

```
# Mellanox ConnectX cards
mlx4_ib
#
# Protocol modules
# Common modules
rdma_ucm
ib_umad
ib_uverbs
# IP over IB
ib_ipoib
# SCSI over IB
ib_srp
```
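To double-check that these entries actually ended up loaded, here is a small Python sketch (the function names are hypothetical, not part of any Debian tool). It compares the module names listed in /etc/modules with the first column of /proc/modules, where the kernel lists the loaded modules:

```python
# Sketch: which modules from /etc/modules are not (yet) loaded?


def wanted_modules(etc_modules: str) -> list[str]:
    """Module names from /etc/modules text; comments and blank lines are skipped."""
    names = []
    for line in etc_modules.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if line:
            # first word is the module name; modprobe treats '-' and '_' alike
            names.append(line.split()[0].replace("-", "_"))
    return names


def loaded_modules(proc_modules: str) -> set[str]:
    """Module names from /proc/modules text (the name is the first column)."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def missing_modules(etc_modules: str, proc_modules: str) -> list[str]:
    """Modules requested in /etc/modules that the kernel does not report as loaded."""
    loaded = loaded_modules(proc_modules)
    return [name for name in wanted_modules(etc_modules) if name not in loaded]


if __name__ == "__main__":
    try:
        with open("/etc/modules") as etc, open("/proc/modules") as proc:
            missing = missing_modules(etc.read(), proc.read())
    except OSError as err:
        print("cannot read module lists:", err)
    else:
        print("missing:", ", ".join(missing) or "none")
```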

After loading the required modules on both nodes, the tool “ibstat” reported the following:

```
# ibstat
CA 'mlx4_0'
    CA type: MT26428
    Number of ports: 1
    Firmware version: 2.6.200
    Hardware version: a0
    Node GUID: 0x003048ffffce4c68
    System image GUID: 0x003048ffffce4c6b
    Port 1:
        State: Initializing
        Physical state: LinkUp
        Rate: 40
        Base lid: 0
        LMC: 0
        SM lid: 0
        Capability mask: 0x02510868
        Port GUID: 0x003048ffffce4c69
```

Well, that looked nice: unplugging the cable changed the status to “Physical state: Polling”, so the hardware seemed to be working. The guide mentioned above explains that a so-called “subnet manager” is needed to get the connection up and running, especially when two nodes are connected directly without a switch; however, it only has to run on one of the two nodes. It seems that all that has to be done is to install the package “opensm” on one of the nodes via “apt-get install opensm”. Afterwards, “ibstat” reported the port state as “Active”, which sounded good, too. Moreover, “ibhosts” looked good as well:

```
# ibhosts
Ca : 0x003048ffffce4c68 ports 1 "MT25408 ConnectX Mellanox Technologies"
Ca : 0x003048fffff62008 ports 1 "MT25408 ConnectX Mellanox Technologies"
```
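For scripted checks on both nodes, the two outputs above can be parsed. A minimal sketch, assuming the field layout shown here (other infiniband-diags versions may format it differently). The interpretation in `diagnose` is a simplification that only covers the states seen in this article, and all function names are made up for illustration:

```python
import re
import shutil
import subprocess

# ibhosts prints one line per channel adapter:
#   Ca : <node GUID> ports <n> "<description>"
CA_LINE = re.compile(r'^Ca\s*:\s*(0x[0-9a-fA-F]+)\s+ports\s+(\d+)\s+"([^"]*)"')


def port_fields(ibstat_output: str) -> dict[str, str]:
    """Return State, Physical state and Rate as printed by ibstat (first port only)."""
    wanted = ("State", "Physical state", "Rate")
    fields: dict[str, str] = {}
    for line in ibstat_output.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key in wanted and key not in fields:
            fields[key] = value.strip()
    return fields


def diagnose(fields: dict[str, str]) -> str:
    """Rough reading of the port state combinations described in the text."""
    state, phys = fields.get("State"), fields.get("Physical state")
    if phys == "Polling":
        return "no physical link: check the cable and the peer node"
    if phys == "LinkUp" and state == "Initializing":
        return "cable OK, but no subnet manager has activated the port yet"
    if phys == "LinkUp" and state == "Active":
        return "link is up and configured by a subnet manager"
    return f"unexpected: State={state}, Physical state={phys}"


def node_guids(ibhosts_output: str) -> list[str]:
    """Node GUIDs of all channel adapters listed by ibhosts."""
    guids = []
    for line in ibhosts_output.splitlines():
        match = CA_LINE.match(line.strip())
        if match:
            guids.append(match.group(1))
    return guids


if __name__ == "__main__":
    # Only query the real tools if they are installed (package infiniband-diags).
    if shutil.which("ibstat") and shutil.which("ibhosts"):
        ibstat = subprocess.run(["ibstat"], capture_output=True, text=True).stdout
        ibhosts = subprocess.run(["ibhosts"], capture_output=True, text=True).stdout
        print(diagnose(port_fields(ibstat)))
        print("adapters seen:", len(node_guids(ibhosts)))
```

On a correctly cabled two-node setup with a running subnet manager, one would expect the diagnosis “link is up” and two adapters in the ibhosts list.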

IP Configuration

Since the module “ib_ipoib” (IP over InfiniBand) was loaded, I now had a network interface:

```
# ifconfig -a
ib0       Link encap:UNSPEC  HWaddr 80-00-00-48-FE-80-00-00-00-00-00-00-00-00-00-00
          BROADCAST MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:256
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
```

The rest was simple: assign an IP address with ifconfig on both machines:

```
node 1 # ifconfig ib0 10.0.1.1 netmask 255.255.255.0
node 2 # ifconfig ib0 10.0.1.2 netmask 255.255.255.0

# ping 10.0.1.2
PING 10.0.1.2 (10.0.1.2) 56(84) bytes of data.
64 bytes from 10.0.1.2: icmp_req=1 ttl=64 time=0.850 ms
64 bytes from 10.0.1.2: icmp_req=2 ttl=64 time=0.150 ms
64 bytes from 10.0.1.2: icmp_req=3 ttl=64 time=0.145 ms
```

So, the link was up and running!
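The dotted netmask 255.255.255.0 corresponds to a /24 prefix, and both ib0 addresses have to lie in that same subnet for the nodes to reach each other directly. A quick check with Python's standard `ipaddress` module (the helper `same_subnet` is hypothetical):

```python
import ipaddress


def same_subnet(addr_a: str, addr_b: str, netmask: str) -> bool:
    """True if both addresses fall into the same network under `netmask`."""
    net_a = ipaddress.ip_interface(f"{addr_a}/{netmask}").network
    net_b = ipaddress.ip_interface(f"{addr_b}/{netmask}").network
    return net_a == net_b


if __name__ == "__main__":
    # Addresses and netmask from the ifconfig commands above.
    print(ipaddress.ip_interface("10.0.1.1/255.255.255.0").network)  # the /24 network
    print(same_subnet("10.0.1.1", "10.0.1.2", "255.255.255.0"))
```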

Performance testing

Of course, the next interesting thing was to test the performance of the link! The idea was to simply use FTP and transfer a huge file:

```
# ftp 10.0.1.1
Connected to 10.0.1.1.
220 ProFTPD 1.3.4a Server (Debian) [::ffff:10.0.1.1]
Name (10.0.1.1:root): user1
331 Password required for user1
Password:
230 User user1 logged in
Remote system type is UNIX.
Using binary mode to transfer files.
ftp> dir
200 PORT command successful
150 Opening ASCII mode data connection for file list
-rw-r--r-- 1 root root 9555161083 Jun 6 12:47 testfile.dat
226 Transfer complete
ftp> get testfile.dat
local: testfile.dat remote: testfile.dat
200 PORT command successful
150 Opening BINARY mode data connection for testfile.dat (9555161083 bytes)
226 Transfer complete
9555161083 bytes received in 38.69 secs (241180.6 kB/s)
```

O.k., so the resulting speed is 241,180 kB/s (the ftp client counts 1 kB as 1024 bytes), i.e. about 247 MByte/s or roughly 2 Gbit/s. Hmmm. This is only about 5% of the 40 Gbit/s link!
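The unit conversion behind these numbers, as a small sketch. The byte count and duration come from the ftp transcript; the assumption that the client's “kB” means 1024 bytes is consistent with the rate it printed. Names are illustrative:

```python
NOMINAL_LINK_GBIT = 40.0  # rate reported by ibstat ("Rate: 40")


def ftp_throughput(n_bytes: int, seconds: float) -> dict[str, float]:
    """Convert a transferred byte count and a duration into several units."""
    bytes_per_s = n_bytes / seconds
    gbit_per_s = bytes_per_s * 8 / 1e9
    return {
        "KiB/s": bytes_per_s / 1024,  # what the ftp client prints as "kB/s"
        "MB/s": bytes_per_s / 1e6,    # decimal megabytes
        "Gbit/s": gbit_per_s,
        "share of link": gbit_per_s / NOMINAL_LINK_GBIT,
    }


if __name__ == "__main__":
    # Numbers taken from the ftp transcript above.
    for unit, value in ftp_throughput(9555161083, 38.69).items():
        print(f"{unit:>14}: {value:,.3f}")
```

This gives roughly 247 MByte/s, just under 2 Gbit/s, or a little less than 5% of the nominal link rate.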

Hmmm, so let’s investigate this further with iperf:

```
# iperf -s
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 85.3 KByte (default)
------------------------------------------------------------
[  4] local 10.0.1.2 port 5001 connected with 10.0.1.1 port 35933
[ ID] Interval       Transfer     Bandwidth
[  4]  0.0-10.0 sec  9.87 GBytes  8.47 Gbits/sec
```

Well, that looks way better, although we are still at only about a fifth of the nominal 40 Gbit/s link rate. The slow FTP transfer may be caused either by the protocol overhead on the link or by buffering, reading from and writing to the underlying hard drives.
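iperf 2 reports the transferred amount in binary GBytes (1024³ bytes) but the bandwidth in decimal Gbit/s; the two numbers above are consistent with that. A sketch that recomputes the rate and relates it both to the nominal 40 Gbit/s and to the roughly 32 Gbit/s left after QDR's 8b/10b encoding (assuming a 4X QDR link; constant names are illustrative):

```python
GIB = 2 ** 30                # iperf's "GBytes" are binary (1024^3 bytes)
NOMINAL_GBIT = 40.0          # QDR signalling rate ("Rate: 40" in ibstat)
USABLE_GBIT = 40.0 * 8 / 10  # data rate after 8b/10b line encoding


def iperf_rate_gbit(transfer_gbytes: float, seconds: float) -> float:
    """Recompute iperf's decimal Gbit/s from its binary GBytes figure."""
    return transfer_gbytes * GIB * 8 / seconds / 1e9


if __name__ == "__main__":
    rate = iperf_rate_gbit(9.87, 10.0)  # values from the iperf output above
    print(f"{rate:.2f} Gbit/s")
    print(f"{rate / NOMINAL_GBIT:.0%} of the nominal 40 Gbit/s")
    print(f"{rate / USABLE_GBIT:.0%} of the usable 32 Gbit/s")
```

With these inputs the link delivers about 8.5 Gbit/s (iperf's 8.47 differs only because 9.87 is rounded), roughly 21% of the nominal rate and about 26% of the usable one.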

The following page has some interesting findings on Infiniband performance on Linux:

https://techs.enovance.com/6285/a-quick-tour-of-infiniband

A good starting point for optimizing performance would be to install the latest driver, update the firmware and so on, but I’ll keep it as it is: a link of roughly 10 Gbit/s is basically all I was hoping for. All in all, the setup was not too complicated, and all the necessary tools are provided by the Debian distribution. So yes, Infiniband is a good alternative to 10 Gbit Ethernet!

Subnet Manager

If the switch does not provide a subnet manager (or, as here, there is no switch at all), “opensm” has to be used as described above. Running opensm on a single node has a drawback: if this node goes down, there is no subnet manager anymore. Therefore it makes sense to start opensm on every node. However, multiple instances of opensm may conflict unless the priority of each opensm daemon is set correctly. On Debian, this is done as follows:

- Normally no configuration is needed: apart from /etc/default/opensm, where only the ports to use can be set, there is no configuration file.

- A configuration file can, however, easily be created as follows:

```
# cd /etc/opensm
# opensm -c opensm.conf
```

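Now the configuration file can be edited: the parameter “sm_priority” has to be set to a suitable value on each machine, for example a higher value on the node that should normally act as master. Valid values range from 0 (lowest) to 15 (highest priority).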
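This edit can also be scripted. The sketch below assumes the generated opensm.conf contains a plain `sm_priority <n>` line, as written by `opensm -c`; `set_sm_priority` and `expected_master` are hypothetical helpers, not part of OpenSM. `expected_master` only models the election rule as we understand it from the InfiniBand specification (highest priority wins, lowest GUID breaks a tie); it does not query opensm:

```python
import re
import sys
from dataclasses import dataclass

# Matches an active "sm_priority <n>" line; [ \t] so the newline is never consumed.
PRIORITY_LINE = re.compile(r"^sm_priority[ \t]+\d+[ \t]*$", re.MULTILINE)


def set_sm_priority(conf_text: str, priority: int) -> str:
    """Return conf_text with sm_priority set to `priority` (valid range 0..15)."""
    if not 0 <= priority <= 15:
        raise ValueError("sm_priority must be between 0 and 15")
    new_line = f"sm_priority {priority}"
    if PRIORITY_LINE.search(conf_text):
        return PRIORITY_LINE.sub(new_line, conf_text, count=1)
    # No active setting found (e.g. only a commented one): append it.
    return conf_text.rstrip("\n") + "\n" + new_line + "\n"


@dataclass(frozen=True)
class SubnetManager:
    node: str
    priority: int  # sm_priority of this node's opensm
    guid: int      # GUID of the port opensm runs on


def expected_master(sms: list[SubnetManager]) -> SubnetManager:
    """Model of the election rule: highest priority wins, lowest GUID breaks ties."""
    return min(sms, key=lambda sm: (-sm.priority, sm.guid))


if __name__ == "__main__":
    # Hypothetical usage: python3 set_sm_priority.py /etc/opensm/opensm.conf 15
    if len(sys.argv) == 3:
        path, prio = sys.argv[1], int(sys.argv[2])
        with open(path) as f:
            text = f.read()
        with open(path, "w") as f:
            f.write(set_sm_priority(text, prio))
```

For example, setting priority 15 on node 1 and 0 on node 2 should make node 1's opensm the master, while node 2's instance waits in standby and takes over if node 1 goes down.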